The algebra associated to SO(1,1)

The special orthogonal group $SO(2)$ sits inside the space of $2\times 2$ real matrices, and by taking the closure under scalar multiplication we obtain the set of all matrices of the form

$$\begin{pmatrix} a & -b \\ b & a \end{pmatrix}.$$

This set of matrices forms an $\mathbb{R}$-algebra, and it is isomorphic to $\mathbb{C}$ by having the above matrix correspond to $a+bi$.

In Math 54, we are covering quadratic forms right now, and I wondered what this sort of construction would give for $SO(1,1)$. Let’s set $Q(x,y)=x^2-y^2$, so then a matrix $A$ is in $SO(1,1)$ if $\det A=1$ and $Q(Av)=Q(v)$ for all $v\in\mathbb{R}^2$. Let’s just solve for what form $A$ must have, since there’s a certain diagram I want to draw for later. Suppose

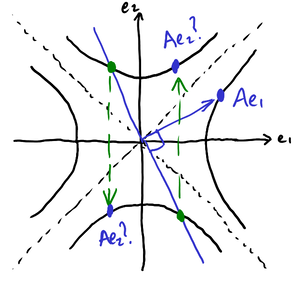

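Write $(a,c)$ and $(b,d)$ for the columns of $A$. Plugging $v=(1,0)$ in to $Q(Av)=Q(v)$ we get $a^2-c^2=1$, and plugging in $v=(0,1)$ we get $b^2-d^2=-1$. The quadratic form has a corresponding bilinear form $B$ satisfying $Q(v)=B(v,v)$, namely $B((x_1,y_1),(x_2,y_2))=x_1x_2-y_1y_2$, and one can show $B(Av,Aw)=B(v,w)$. Hence, $B(A(1,0),A(0,1))=B((1,0),(0,1))=0$ and $B(A(1,0),A(0,1))=ab-cd$, giving an additional equation $ab-cd=0$. Geometrically speaking, this is that the columns of $A$ are orthogonal (via the dot product) after reflecting the second vector over the $x$-axis. The following plot shows the hyperbolae $x^2-y^2=1$ and $x^2-y^2=-1$, which respectively contain the columns of $A$, along with the two points on the second hyperbola that are orthogonal to the first column $(a,c)$.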
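As a numeric sanity check of these constraints, here is a minimal sketch. It assumes $Q(x,y)=x^2-y^2$ as above and uses a sample matrix built from hyperbolic functions. The helper names (`sample_matrix`, `columns`, `det`) and the choice of sample are illustrative assumptions, not part of the derivation.

```python
import math

def Q(v):
    """The quadratic form Q(x, y) = x^2 - y^2."""
    x, y = v
    return x * x - y * y

def B(v, w):
    """The bilinear form with B(v, v) = Q(v)."""
    return v[0] * w[0] - v[1] * w[1]

def sample_matrix(t):
    """Assumed sample: columns (cosh t, sinh t) and (sinh t, cosh t)."""
    return ((math.cosh(t), math.sinh(t)),
            (math.sinh(t), math.cosh(t)))

def columns(A):
    """Return the two columns of a 2x2 matrix given as a tuple of rows."""
    return (A[0][0], A[1][0]), (A[0][1], A[1][1])

def det(A):
    return A[0][0] * A[1][1] - A[0][1] * A[1][0]

A = sample_matrix(0.7)
col1, col2 = columns(A)
# Up to rounding, these should be 1, -1, 0 and 1 respectively.
print(Q(col1), Q(col2), B(col1, col2), det(A))
```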

Now let’s consider the corresponding algebra, which comes from taking all scalar multiples of these matrices together with their sums. The effect of this is to take all matrices of the form

$$\begin{pmatrix} a & b \\ b & a \end{pmatrix}$$

with no constraints on $a$ and $b$. (If I hadn’t done the above analysis, I would have been surprised to learn that these matrices form a commutative subalgebra!)

The only two-dimensional algebras over $\mathbb{R}$, up to isomorphism, are $\mathbb{R}\times\mathbb{R}$, $\mathbb{R}[x]/(x^2)$, and $\mathbb{C}$, and it seems like it should be the first. (It can’t have nilpotent elements since the square of the above matrix has $a^2+b^2$ in the $(1,1)$ entry, and for a similar reason it can’t be $\mathbb{C}$ since it lacks periodic elements.) How do we decompose it, then?
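A quick way to see the commutativity and the shape of the square is to multiply two such matrices directly. The sketch below assumes the matrices in question have equal diagonal entries and equal off-diagonal entries; `sym` and `matmul2` are hypothetical helper names.

```python
def sym(a, b):
    """The 2x2 matrix with a on the diagonal and b off the diagonal."""
    return ((a, b), (b, a))

def matmul2(A, B):
    """Product of two 2x2 matrices given as tuples of rows."""
    return tuple(
        tuple(sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )

M, N = sym(2, 3), sym(5, 7)
print(matmul2(M, N) == matmul2(N, M))  # True
print(matmul2(M, M))                   # ((13, 12), (12, 13))
```

The square of `sym(a, b)` is `sym(a*a + b*b, 2*a*b)`, so its diagonal entry vanishes only when both `a` and `b` are zero.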

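It’s easy enough to solve for a set of minimal orthogonal idempotents. They turn out to be the orthogonal projections onto the lines $y=x$ and $y=-x$:

$$P_+=\frac{1}{2}\begin{pmatrix} 1 & 1 \\ 1 & 1 \end{pmatrix},\qquad P_-=\frac{1}{2}\begin{pmatrix} 1 & -1 \\ -1 & 1 \end{pmatrix}.$$

These certainly have the property that $P_+P_-=0$ and $P_++P_-=I$ (while also being well outside of $SO(1,1)$ proper!). Thus, we have a decomposition

$$\begin{pmatrix} a & b \\ b & a \end{pmatrix}=(a+b)P_++(a-b)P_-$$

and furthermore an isomorphism to $\mathbb{R}\times\mathbb{R}$ by sending such a matrix to $(a+b,a-b)$.

A consequence of this is that we get a sort of geometric rule for multiplication of matrices in $SO(1,1)$. We regard a matrix in $SO(1,1)$ as being a point on the hyperbola $x^2-y^2=1$, namely its first column $(a,b)$. Step 1: take each of the two points and project it onto the lines $y=x$ and $y=-x$. Step 2: think of these two lines as being $\mathbb{R}$ (with $(\tfrac12,\tfrac12)$ and $(\tfrac12,-\tfrac12)$ as the units) and multiply the corresponding numbers. Step 3: “un-project” the resulting two numbers to form a new point on the hyperbola. (Or said more simply, rotate the plane by $45^\circ$ while scaling by $\sqrt{2}$, do component-wise multiplication of the points, then rotate and scale back. The fact that $SO(1,1)$ is closed under multiplication from this point of view is that if you have pairs $(u_1,v_1)$ and $(u_2,v_2)$ with $u_1v_1=1$ and $u_2v_2=1$, then $(u_1u_2,v_1v_2)$ has $u_1u_2v_1v_2=1$.)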
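The three steps can be sketched in a few lines. This assumes a matrix is recorded by its first column $(a,b)$ and that the coordinates along the two lines are $a+b$ and $a-b$. The function names are hypothetical, and exact fractions are used so the hyperbola condition can be checked without rounding.

```python
from fractions import Fraction

def project(a, b):
    """Step 1: coordinates of (a, b) along the lines y = x and y = -x."""
    return (a + b, a - b)

def unproject(u, v):
    """Step 3: recover (a, b) from its two coordinates."""
    return ((u + v) / 2, (u - v) / 2)

def multiply(p, q):
    """Steps 1-3: project both points, multiply component-wise, un-project."""
    u1, v1 = project(*p)
    u2, v2 = project(*q)
    return unproject(u1 * u2, v1 * v2)

# Two rational points on x^2 - y^2 = 1.
p = (Fraction(5, 4), Fraction(3, 4))
q = (Fraction(5, 3), Fraction(4, 3))
r = multiply(p, q)
print(r)                     # (Fraction(37, 12), Fraction(35, 12))
print(r[0] ** 2 - r[1] ** 2) # 1
```

The result agrees with the ordinary matrix product, whose first column is $(a_1a_2+b_1b_2,\ a_1b_2+b_1a_2)$.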

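For example, since points of the positive sheet of the hyperbola have the parameterization $(\cosh t,\sinh t)$, then under the assumption that this is supposed to be a homomorphism with $t$ behaving like an angle, we can recover some identities. Set $(a_1,b_1)=(\cosh s,\sinh s)$ and $(a_2,b_2)=(\cosh t,\sinh t)$. Then $\cosh(s+t)+\sinh(s+t)=(\cosh s+\sinh s)(\cosh t+\sinh t)$ and $\cosh(s+t)-\sinh(s+t)=(\cosh s-\sinh s)(\cosh t-\sinh t)$. That is,

$$\cosh(s+t)=\cosh s\cosh t+\sinh s\sinh t,\qquad \sinh(s+t)=\sinh s\cosh t+\cosh s\sinh t.$$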

Something to notice for the positive hyperbola is that the projections onto the two lines, as numbers relative to $(\tfrac12,\tfrac12)$ and $(\tfrac12,-\tfrac12)$, are always greater than $0$. By taking natural logarithms, one can add instead of multiplying for the composition law. This corresponds to $\cosh t+\sinh t=e^{t}$ and $\cosh t-\sinh t=e^{-t}$. (Note: the base of the exponentials is not fixed by this observation.)
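A small numeric sketch of the logarithm remark: assuming points on the positive sheet are written $(\cosh t,\sinh t)$ and composed like the first columns of the corresponding matrices, the logarithm of the coordinate $a+b$ should add under composition. The names `log_coordinate` and `compose` are illustrative.

```python
import math

def log_coordinate(a, b):
    """Natural log of the coordinate a + b along the line y = x."""
    return math.log(a + b)

def compose(p, q):
    """First column of the product of the matrices with first columns p and q."""
    (a1, b1), (a2, b2) = p, q
    return (a1 * a2 + b1 * b2, a1 * b2 + b1 * a2)

s, t = 0.3, 1.1
p = (math.cosh(s), math.sinh(s))
q = (math.cosh(t), math.sinh(t))

# Up to rounding, both printed values should equal s + t.
print(log_coordinate(*compose(p, q)), log_coordinate(*p) + log_coordinate(*q))
```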

As a Clifford algebra

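Another way to write $\mathbb{R}\times\mathbb{R}$ is as $\mathbb{R}[x]/(x^2-1)$, where the isomorphism from $\mathbb{R}[x]/(x^2-1)$ is from $p(x)\mapsto(p(1),p(-1))$ with $x\mapsto(1,-1)$. The corresponding isomorphism from the above algebra to $\mathbb{R}[x]/(x^2-1)$ is given by

$$\begin{pmatrix} a & b \\ b & a \end{pmatrix}\mapsto a+bx,$$

so $x$ corresponds to the permutation matrix of order $2$. Hence, the algebra is isomorphic to the Clifford algebra on a 1-dimensional space with a positive-definite quadratic form. (These are also known as the split-complex numbers.)

SO(2,1)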

Although it seemed unlikely, I wondered whether $SO(2,1)$ might give an interesting subalgebra in the space of $3\times 3$ matrices. It turns out the whole space is spanned by $SO(2,1)$, so the answer is no.
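One way to check the spanning claim by machine is to compute the rank of a collection of group elements flattened to vectors of length 9. The sketch below is only an illustration under stated assumptions: it takes the form $x^2+y^2-z^2$, picks one rational rotation and one rational boost as generators (my choice, not from the text), and uses exact fractions so the rank is not affected by rounding.

```python
from fractions import Fraction as Fr

I3 = ((Fr(1), Fr(0), Fr(0)), (Fr(0), Fr(1), Fr(0)), (Fr(0), Fr(0), Fr(1)))
# Assumed generator: rotation in the xy-plane with (cos, sin) = (3/5, 4/5).
R = ((Fr(3, 5), Fr(-4, 5), Fr(0)),
     (Fr(4, 5), Fr(3, 5), Fr(0)),
     (Fr(0), Fr(0), Fr(1)))
# Assumed generator: boost in the xz-plane with (cosh, sinh) = (5/4, 3/4).
Bz = ((Fr(5, 4), Fr(0), Fr(3, 4)),
      (Fr(0), Fr(1), Fr(0)),
      (Fr(3, 4), Fr(0), Fr(5, 4)))

def matmul3(A, B):
    """Product of two 3x3 matrices given as tuples of rows."""
    return tuple(
        tuple(sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3))
        for i in range(3)
    )

def rank(rows):
    """Rank via exact forward elimination."""
    rows = [list(r) for r in rows]
    rk = 0
    for col in range(len(rows[0])):
        pivot = next((i for i in range(rk, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[rk], rows[pivot] = rows[pivot], rows[rk]
        for i in range(rk + 1, len(rows)):
            if rows[i][col] != 0:
                f = rows[i][col] / rows[rk][col]
                rows[i] = [x - f * y for x, y in zip(rows[i], rows[rk])]
        rk += 1
    return rk

# Collect all products of at most 8 generators.
words = {I3}
frontier = {I3}
for _ in range(8):
    frontier = {matmul3(g, w) for g in (R, Bz) for w in frontier}
    words |= frontier

flat = [[x for row in W for x in row] for W in words]
print(rank(flat))  # 9
```

Words of length up to 8 suffice here because the span of the words of length at most $k$ must grow by at least one dimension at each step until it stops growing, and it starts at dimension 1 with the identity.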

It wasn’t necessary for this, but through a similar process I worked out a parameterization of the component of $SO(2,1)$ containing the identity:

This factorizes as